Cloud computing and artificial intelligence: Two technologies that were made for each other.

Well, not quite. Cloud-based AI is still a work in progress, although the long-term forecast remains rosy as the pieces of the AI puzzle fall into place. What is uncertain is which of the most promising AI platforms will realize the technology's potential. Here's a look at where the AI platform leaders are today, and how they are likely to stack up in the future.

Amazon's big lead in the cloud gives it a leg up in the AI sweepstakes

If the history of the technology industry teaches one lesson, it's that an early lead in a new product category can evaporate faster than you can say "Netscape" or "MySpace." Still, no company appears to be ready to challenge the substantial lead Amazon AWS has in the cloud. If you think this fact will prevent competitors from taking on the company in AI, well, you don't know tech.

The recent joint announcement by Amazon founder and CEO Jeff Bezos and Microsoft CEO Satya Nadella that their AI-based voice assistants -- Amazon's Alexa and Microsoft's Cortana -- would work together caught the attention of the press. TechCrunch's Natasha Lomas writes in an August 30, 2017, article that despite the clunkiness of one voice assistant calling the other, the deal brings together Cortana's business and productivity focus with Alexa's emphasis on consumer e-commerce and entertainment.

In an August 30, 2017, interview with the New York Times' Nick Wingfield, Bezos states that people will turn to multiple voice assistants for information, depending on the subject matter. That's why it makes sense for Alexa to depend on Cortana when someone needs to access data that resides in Outlook rather than build a direct link for Alexa to the Microsoft productivity app.

Yet the Amazon AI projects getting all the press are the "showy ones," according to Bezos in an interview with Internet Association CEO Michael Beckerman. As GeekWire's Todd Bishop reports in a May 6, 2017, article, Bezos states that machine learning and AI will usher in a "renaissance" and "golden age" in which these "advanced techniques" will be accessible to all organizations, "even if they don’t have the current class of expertise that’s required."

The Amazon AI portfolio: Something for everyone

The cloud leader has assembled a formidable lineup of AI services to offer its customers. Topping the list is the AWS Deep Learning AMI (Amazon Machine Image) that simplifies the process of creating "managed, auto-scaling clusters of GPUs for training and inference at any scale." The product is pre-installed with Apache MXNet, TensorFlow, Caffe2 (and Caffe), Theano, Torch, Microsoft Cognitive Toolkit, Keras, and other deep learning tools and drivers, according to the company.

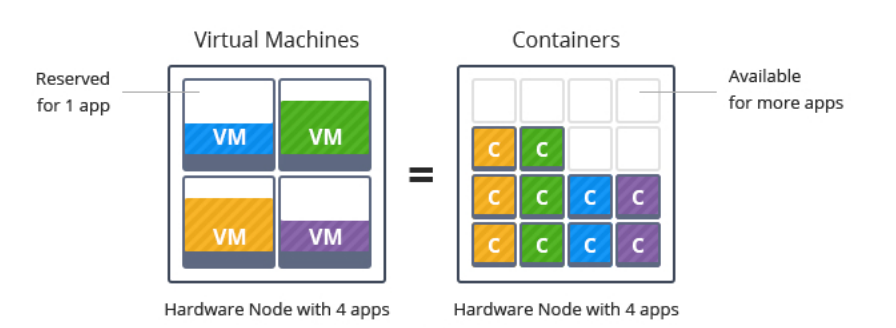

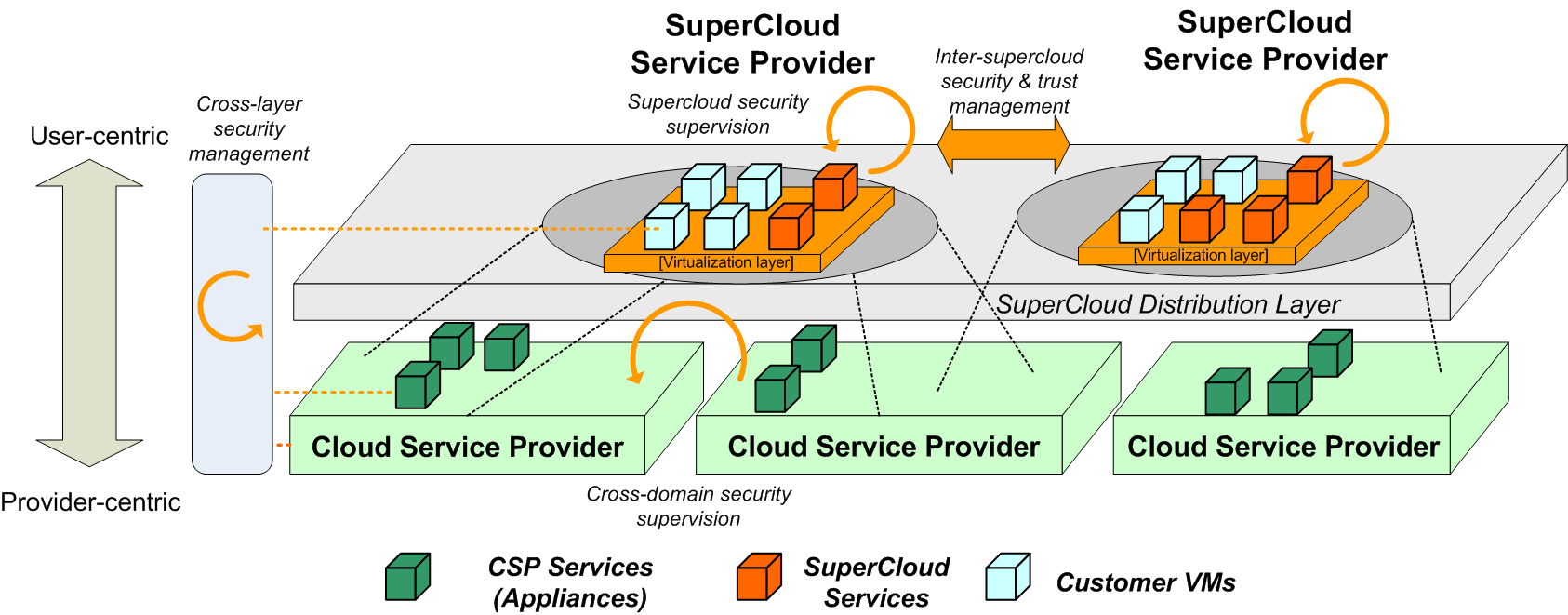


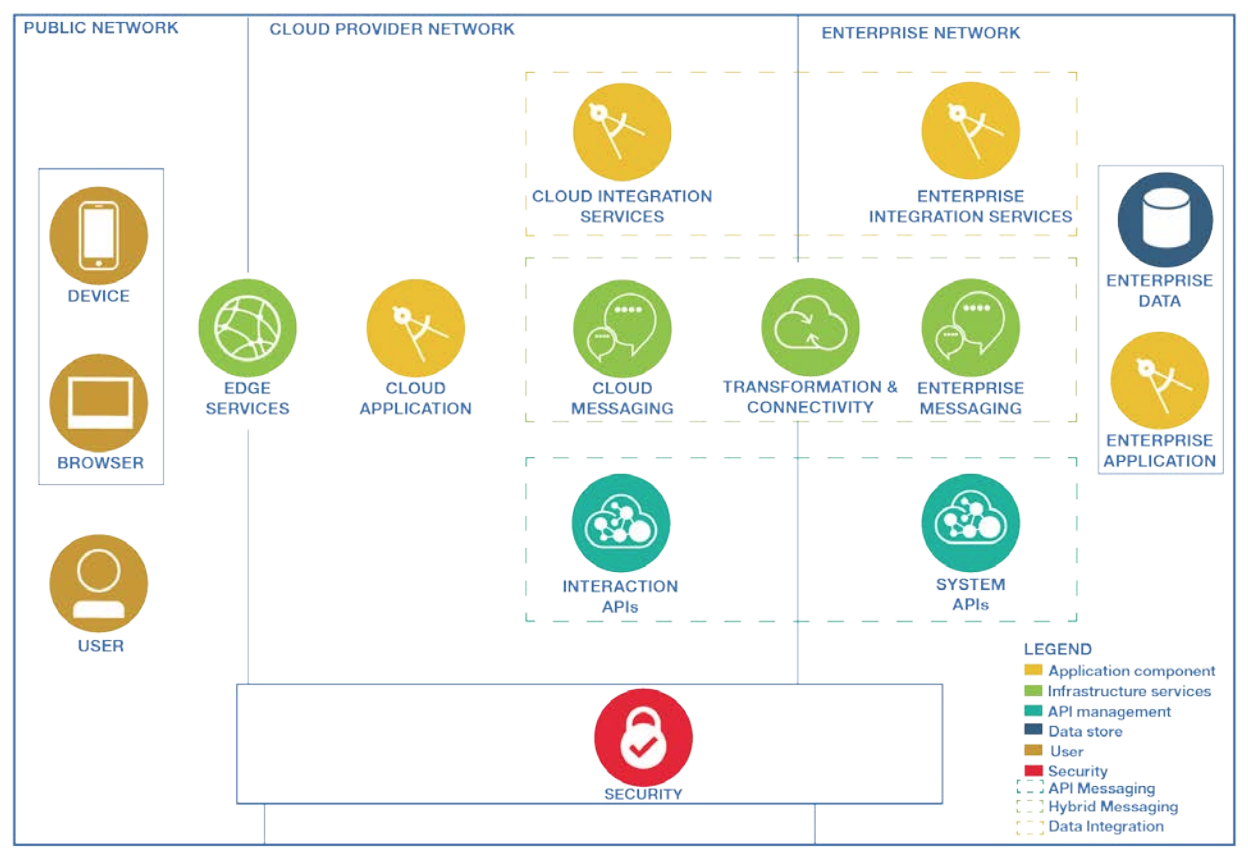

Amazon's AI offerings are separated into three layers to address the varying requirements and expertise levels of its business customers. Source: Amazon, via TechRepublic

The AWS Deep Learning CloudFormation template uses the Amazon Deep Learning AMI to facilitate setting up a distributed deep learning cluster, including the EC2 instances and other AWS resources the cluster requires. Three API-driven AI services are designed to let developers add AI features to their apps via API calls:

- Amazon Lex uses Alexa's automatic speech recognition (ASR) and natural language understanding (NLU) to add "conversational interfaces," or chatbots, to your apps

- Amazon Polly converts text to "lifelike" speech in more than two dozen languages and in both male and female voices

- Amazon Rekognition uses the image-analysis features of Amazon Prime Photos to give apps the ability to identify objects, scenes, and faces in images; it can also compare faces between images
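To give a rough sense of what "via API calls" can look like, here is a minimal sketch that assumes the AWS SDK for Python (boto3), which the sources above do not mention. The helper names, the `Joanna` voice, the `us-east-1` region, and the confidence threshold are illustrative choices, not anything Amazon prescribes. The helpers take an already-built client so they can be exercised with a stand-in object.

```python
from typing import Any, List, Tuple


def synthesize_speech(polly_client: Any, text: str, voice_id: str = "Joanna") -> bytes:
    """Ask Amazon Polly to convert text to MP3 audio and return the raw bytes."""
    response = polly_client.synthesize_speech(
        Text=text,
        OutputFormat="mp3",
        VoiceId=voice_id,  # assumed voice; any voice Polly offers would do
    )
    # AudioStream is a file-like streaming body; read() yields the audio bytes.
    return response["AudioStream"].read()


def detect_labels(
    rekognition_client: Any, image_bytes: bytes, min_confidence: float = 80.0
) -> List[str]:
    """Ask Amazon Rekognition which objects and scenes it sees in an image."""
    response = rekognition_client.detect_labels(
        Image={"Bytes": image_bytes},
        MaxLabels=10,
        MinConfidence=min_confidence,  # assumed threshold, in percent
    )
    return [label["Name"] for label in response["Labels"]]


def make_clients(region_name: str = "us-east-1") -> Tuple[Any, Any]:
    """Build real clients; needs boto3 installed and AWS credentials configured."""
    import boto3  # imported lazily so the helpers above work without it

    return (
        boto3.client("polly", region_name=region_name),
        boto3.client("rekognition", region_name=region_name),
    )
```

With credentials in place, `polly, rekognition = make_clients()` followed by `synthesize_speech(polly, "Hello from the cloud")` would return audio bytes that can be written to an `.mp3` file. Each call is billed by AWS, so this is best tried on small inputs.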

Two other Amazon AI tools are intended to help developers and data scientists create models that can be deployed and managed with minimum overhead. Amazon Machine Learning offers visualization tools and wizards that allow machine learning models to be devised without having to deal with complex algorithms. Apache Spark on Amazon EMR (Elastic MapReduce) serves up a managed Hadoop framework for processing data-intensive apps across EC2 instances.

IBM Watson: Off to a slow start, but still a top contender

The consensus is that IBM Watson is over-hyped. Maybe Watson's critics are simply impatient. Artificial intelligence requires a great deal of data. The most efficient place for that data to reside is in the cloud. As the cloud grows, it provides AI systems such as Watson with the space they need to blossom. At present, much of the deep data Watson needs to thrive remains missing in action.

In a June 28, 2017, article, Fortune's Barb Darrow explains that Watson isn't a single service, which confounds some customers. Instead, Watson is "a set of technologies that need[] to be stitched together at [the customer's] site." You're buying "a big integration project" rather than a product, according to Darrow.

Much can be learned from what appear to be Watson failures, Darrow writes. For example, MIT Tech Review found that the cause of M.D. Anderson Cancer Center's decision to cancel a collaboration with Watson Health was a shortage of the data Watson needs to be "trained."

The Register's Shaun Nichols, in a July 19, 2017, article, points out another shortcoming of IBM's AI platform. Watson is noted for being "tricky to use compared to the competition." More importantly, IBM is "losing the war for AI talent," which reduces the chances that the company will be able to develop competitive AI products in the future.

In an August 25, 2017, article, TechTarget's Mekhala Roy quotes Ruchir Puri, IBM Watson chief architect: "AI and the cloud are two sides of the same coin." Because of cloud services, more developers have access to AI in a "very consumable way," according to Puri. This includes middle school students using Watson as part of a robotics project, for example.

As AI tools reach a broader swath of users, Watson stands poised to serve as the AI platform for the masses... eventually.

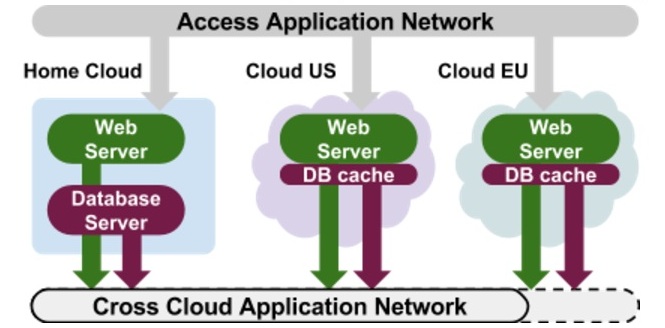

Google Cloud Machine Learning Engine and DeepMind

Google's initial foray into providing businesses with a platform for their AI initiatives is the Google Cloud Machine Learning Engine, which the company describes as a managed service for building "machine learning models that work on any type of data, of any size." The service depends on the AI-learning TensorFlow framework that also underpins Google Photos, Google Cloud Speech, and other products from the company.

Like IBM's Watson, Google Cloud Machine Learning Engine is a collection of parts that customers assemble as their needs dictate. As TechTarget's Kathleen Casey reports in a July 2017 article, the service's four primary components are a REST API, the gcloud command-line tool, the Google Cloud Platform Console, and the Google Cloud Datalab. The ability to integrate with such Google services as Google Cloud Dataflow and Google Cloud Storage adds the processor and storage elements required to create machine-learning apps.

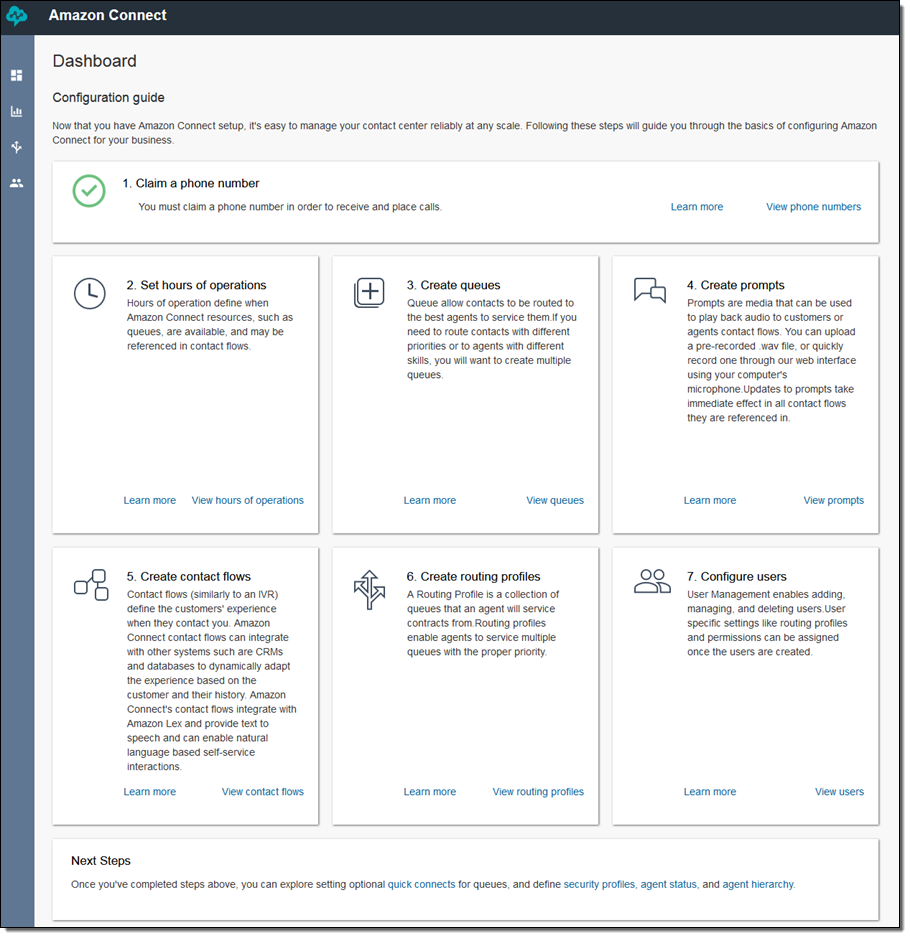


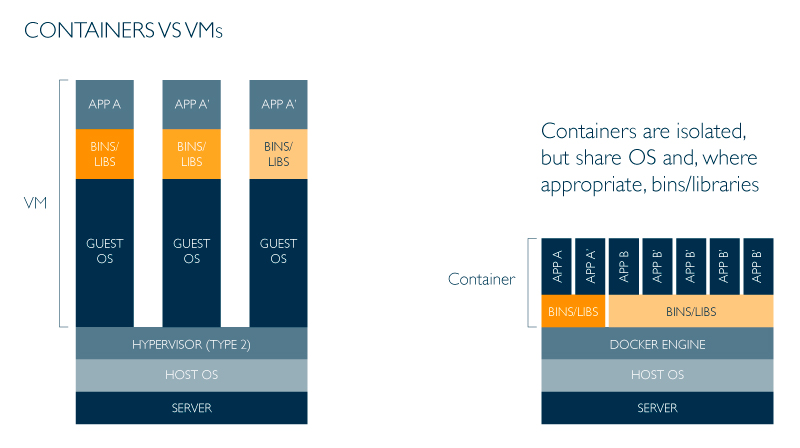

The four leading cloud machine-learning vendors take very different approaches in their attempts to dominate the burgeoning field of cloud AI. Source: TechTarget

NetworkWorld's Gary Eastwood writes in a May 8, 2017, article that in addition to making TensorFlow open source, Google provides businesses that aren't able to create their own custom machine-learning models with "pretrained models." According to the Next Platform's Jeffrey Burt in a March 9, 2017, article, Google hopes to leverage its cloud AI initiatives to close the gap between it and the two current cloud leaders, Amazon AWS and Microsoft Azure.

No one doubts Google's commitment to ruling AI in the cloud. As Burt points out, the company has invested $30 billion in its cloud strategy. Eric Schmidt, executive chairman of Google's parent company, Alphabet, is quoted as saying Google has "the money, the means, and the commitment to pull off a new platform of computation globally for everyone who needs it. Please don’t attempt to duplicate this. You have better uses [for] your money."

Microsoft Project Brainwave/Cognitive Toolkit

Making AI more accessible is a common theme among the platform contenders. In this regard, Microsoft has credentials its AI competitors lack. Microsoft calls its new Project Brainwave a "deep learning acceleration platform," according to TechRadar's Darren Allen in an August 23, 2017, article. Using Intel’s Stratix 10 field programmable gate array hardware accelerator, the company promises "ultra-low latency" data transfers and "effectively" real-time AI calculations.

Project Brainwave supports Microsoft's Cognitive Toolkit and Google's TensorFlow; support for Azure customers is expected soon, according to the company. In an August 25, 2017, post in TechNewsWorld, Richard Adhikari describes Project Brainwave's three primary layers:

- High-performance, distributed system architecture

- Deep neural network (DNN) engine synthesized onto field programmable gate arrays

- Compiler and runtime for low-friction deployment of trained models

According to Adhikari, Microsoft's AI platform is designed to benefit from the company's Project Catapult "massive FPGA infrastructure" that has been added in recent years to Azure and Bing. It's worth noting that Microsoft chose to use FPGAs rather than chips optimized for a specific set of algorithms, which is the approach Google took with its Tensor Processing Unit. Forbes' Aaron Tilley writes in an August 25, 2017, article that this gives Microsoft more flexibility, which is an important feature considering the fast pace of change in deep learning.

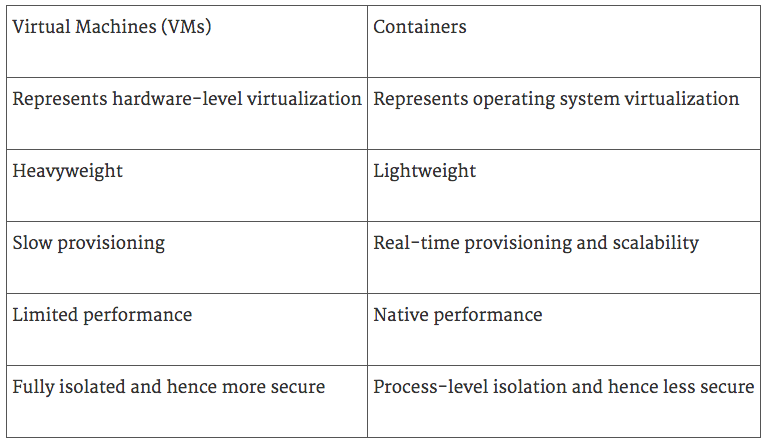

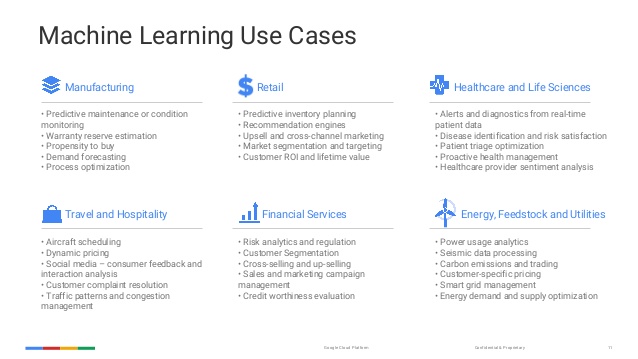

Seeking Alpha's Motek Moyen agrees that Project Brainwave's flexibility is an advantage over Google's TPU. In an August 29, 2017, article, Moyen states that future-proofing its AI platform will allow Microsoft to challenge Amazon's AWS as the number one AI provider. While Amazon maintains its lead in the IaaS space, Microsoft holds the top spot in the enterprise SaaS category.


Amazon's tremendous lead in the market for cloud infrastructure services could be challenged by Microsoft's Project Brainwave and other AI-based platforms. Source: Synergy Research Group, via Seeking Alpha

In the AI horserace, don't rule out late-closing Facebook, Apple

When members of Facebook's AI Research (FAIR) team spoke at a recent NVIDIA GPU tech conference in San Jose, CA, they focused on the company's work to advance the field in general, particularly "longer-term academic problems," according to the New Stack's TC Currie in an August 21, 2017, article. However, there are at least two areas where Facebook intends to "productize" its research: language translation and image classification.

Facebook's primary advantage in AI research is the incredible volume of data it has to work with. The models the FAIR team trains may have millions of parameters (weights and biases) to tune. The resulting dataset may be "tens of terabytes" in size, according to Currie. In addition to the multi-terabyte datasets, the company's deep neural net (DNN) training must support computer vision models requiring from 5 to more than 100 exa-FLOPS (floating point operations per second) of compute and billions of I/O operations per second.

Until recently, Apple wasn't saying much about its AI plans. As the Wall Street Journal's Tripp Mickle points out in a September 3, 2017, article, Apple's historic penchant for secrecy may work against its AI efforts because researchers prize the opportunity to publish and discuss their AI work openly. Working in Apple's favor is the ability of researchers to influence the consumer products that will put AI into the hands of millions, perhaps billions of people.

While it often feels like AI and other paradigm-shifting technologies come upon us in a rush, the reality is that the trip from the lab to the street is long and slow, filled with many twists and turns. And like the fabled contest between the tortoise and the hare, the race doesn't always go to the swiftest.
